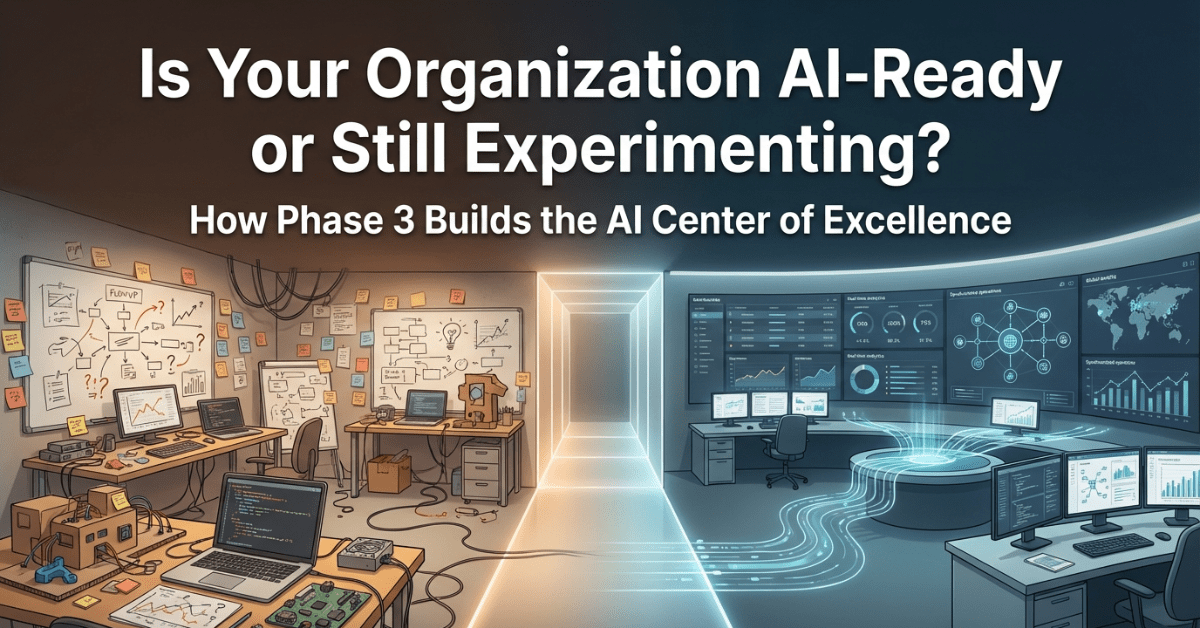

As organizations move from AI curiosity to real business impact, one truth becomes increasingly clear: pilots may prove what is possible, but governance determines what is sustainable. Many enterprises succeed in launching promising AI experiments but struggle to scale them responsibly, consistently, and securely. This is where Phase 3 of the AI Literacy Transformation Roadmap plays a defining role.

In the framework outlined in "Closing the AI Literacy Gap: A Roadmap for Enterprise-wide Transformation," Phase 3 (Months 7 to 9) focuses on establishing an AI Center of Excellence (CoE). This phase marks the shift from experimentation to institutionalization, ensuring that AI adoption is no longer fragmented or tool-driven, but governed, scalable, and strategically aligned with business goals.

From Proof of Value to Operational Excellence

By the time organizations reach Phase 3, they have already completed:

Phase 1 (Months 1 to 3): Leadership alignment, foundational AI literacy, and strategic intent.

Phase 2 (Months 4 to 6): Targeted pilots designed to demonstrate tangible business value.

These pilots generate momentum and confidence. However, without a formal structure to support them, they risk remaining isolated successes. Different teams may adopt different tools, apply inconsistent governance, and follow varied ethical standards. Over time, this leads to duplication, risk exposure, and operational inefficiency.

Phase 3 addresses this precise inflection point by introducing the AI Center of Excellence as the enterprise’s central coordinating body for AI initiatives.

What Is an AI Center of Excellence?

An AI Center of Excellence is a cross-functional structure that integrates learning, compliance, technology, and operational excellence into one unified governance model.

It does not exist as an isolated technical team. Instead, it functions as a strategic hub that aligns AI capability with enterprise objectives.

The purpose of the CoE is not to control innovation, but to enable responsible, scalable, and high-impact AI adoption across the organization.

What Are the Core Responsibilities of the AI Center of Excellence?

1. Policy Development, Bias Management, and Ethical Oversight

As AI becomes embedded in critical workflows, ethical use and risk management become non-negotiable. The CoE establishes:

Responsible AI policies

Bias detection and mitigation standards

Model transparency and explainability guidelines

Data privacy and regulatory compliance frameworks

This ensures that innovation does not outpace governance and that AI adoption strengthens trust rather than undermines it.

2. Standardization of AI Workflows and Vendor Evaluation

Without standards, AI efforts quickly become fragmented. The CoE defines:

Enterprise-wide AI development and deployment workflows

Security and data-handling protocols

Performance benchmarks and validation criteria

Objective frameworks for evaluating AI vendors and platforms

This standardization allows the organization to reuse success patterns, reduce duplication, control risk, and maintain consistent performance across business units.
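One way to picture an "objective framework" for vendor evaluation is a weighted scoring matrix. The sketch below is illustrative only: the criteria, weights, vendor names, and 0-to-5 ratings are assumptions made for the example, not values from the roadmap, and a real CoE would define its own.

```python
# Hypothetical criteria and weights; a real CoE would agree on its own set.
WEIGHTS = {
    "security": 0.35,
    "performance": 0.30,
    "integration": 0.20,
    "cost": 0.15,
}


def weighted_score(ratings: dict, weights: dict = WEIGHTS) -> float:
    """Return the weighted average of 0-5 ratings across all criteria."""
    missing = set(weights) - set(ratings)
    if missing:
        # Refuse to score a vendor that was not rated on every criterion.
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(ratings[c] * w for c, w in weights.items()) / total_weight


def rank_vendors(vendors: dict) -> list:
    """Return vendor names ordered from highest to lowest weighted score."""
    return sorted(vendors, key=lambda name: weighted_score(vendors[name]), reverse=True)


# Illustrative ratings only (0 = poor, 5 = excellent).
vendors = {
    "vendor_a": {"security": 4, "performance": 3, "integration": 5, "cost": 2},
    "vendor_b": {"security": 5, "performance": 4, "integration": 3, "cost": 3},
}

print(rank_vendors(vendors))
```

Under these assumed weights, vendor_b ranks ahead of vendor_a because security and performance count for more than integration. Making the weights explicit is the point: business units can debate the weighting once, centrally, rather than re-arguing it for every purchase.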

3. Role-Specific AI Capability Pathways

AI literacy is not one-size-fits-all. The CoE designs structured learning pathways tailored to:

Leaders and managers: Strategic AI thinking, ethical governance, investment decision-making

Analysts and knowledge workers: Applied AI tools, decision augmentation, data interpretation

Frontline employees: Task-level AI usage, automation, and productivity enablement

By aligning learning to real job roles, the organization moves from generic awareness to practical, job-embedded AI capability.

4. Defining KPIs for Adoption, Productivity, and Governance

One of the most overlooked aspects of AI transformation is measurement. The CoE establishes balanced performance metrics across four dimensions:

Adoption: Utilization rates, tool penetration, workforce participation

Productivity: Cycle-time reduction, output quality, automation benefits

Business impact: Cost optimization, revenue influence, customer value

Governance: Compliance adherence, ethical incidents, model performance stability

These KPIs ensure that AI remains accountable to business outcomes while maintaining strong governance discipline.
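The four dimensions above can be tracked in a simple scorecard. The following is a minimal sketch, assuming each KPI has a numeric value and a target; the KPI names, values, and targets are invented for illustration and do not come from the roadmap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    dimension: str  # "adoption", "productivity", "business_impact", or "governance"
    name: str
    value: float
    target: float
    higher_is_better: bool = True

    def on_target(self) -> bool:
        """True when the measured value meets or beats the target."""
        if self.higher_is_better:
            return self.value >= self.target
        return self.value <= self.target


def scorecard(kpis: list) -> dict:
    """Return the fraction of KPIs on target for each dimension."""
    totals = {}
    for kpi in kpis:
        met, count = totals.get(kpi.dimension, (0, 0))
        totals[kpi.dimension] = (met + int(kpi.on_target()), count + 1)
    return {dim: met / count for dim, (met, count) in totals.items()}


# Illustrative figures only.
kpis = [
    Kpi("adoption", "weekly_active_users_pct", 62.0, 60.0),
    Kpi("adoption", "teams_with_trained_staff_pct", 40.0, 75.0),
    Kpi("productivity", "cycle_time_days", 4.5, 5.0, higher_is_better=False),
    Kpi("business_impact", "cost_savings_usd", 120_000, 100_000),
    Kpi("governance", "ethical_incidents", 1, 0, higher_is_better=False),
]

for dimension, share in scorecard(kpis).items():
    print(f"{dimension}: {share:.0%} of KPIs on target")
```

Reporting each dimension separately, rather than as one blended score, keeps a strong productivity result from masking a governance shortfall.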

Why Institutionalizing AI Through a CoE Matters

Establishing a CoE is what converts AI from an experimental capability into an organizational muscle. The benefits are both immediate and long term:

AI initiatives become coordinated instead of siloed

Compliance and ethics become systematic rather than reactive

Learning becomes structured rather than ad hoc

Scaling becomes repeatable instead of fragile

Leadership gains enterprise-level visibility into AI performance and value

Most importantly, the organization develops confidence in AI as a strategic capability, not merely a collection of tools.

The Strategic Timing of Phase 3

Positioning the AI CoE after pilots is intentional and critical. By Month 7:

Leadership has already seen early value

Workforce resistance is lower due to proven use cases

Data from pilots informs real governance and standards

Budgeting and resourcing decisions are evidence-based

This ensures that the CoE is not built on assumptions, but on operational reality and demonstrated value.

Key Challenges in Building an Effective AI CoE

While the CoE delivers powerful advantages, organizations must navigate several challenges carefully:

Balancing governance and agility: Excessive control can slow innovation, while too little oversight increases risk.

Cross-functional resourcing: The CoE must draw from IT, HR, learning, legal, compliance, and business units.

Defining the right success metrics: Overemphasis on short-term ROI can obscure long-term strategic value.

Managing cultural resistance: Employees may fear job displacement or loss of autonomy.

Adapting to rapid AI evolution: Governance cannot be static in a fast-changing technology landscape.

Successful organizations treat the CoE as a living system that continuously evolves rather than a fixed administrative unit.

A Practical Implementation Model

High-maturity organizations rarely run their AI Center of Excellence as a fully centralized “command-and-control” unit. Instead, they increasingly adopt a hub-and-spoke operating model that blends consistency with flexibility.

1. The Hub: Enterprise Governance and Shared Capability

The central hub is typically a cross-functional team that brings together AI, data, architecture, security, legal, compliance, and learning expertise. Its core responsibilities include:

Policies, ethics, and governance

The hub defines responsible AI guidelines, risk controls, and approval workflows, ensuring that AI use aligns with regulatory requirements and organizational values. Microsoft’s Cloud Adoption Framework, for example, recommends an AI CoE to centralize governance, monitor regulatory changes, and manage AI-related risks at the enterprise level.

Standards, reference architectures, and reusable assets

It creates standardized patterns for data pipelines, model lifecycle management, MLOps, and security controls, so teams do not reinvent the wheel for every new use case. Deloitte highlights this as a defining feature of the “AI operating model,” where an AI CoE institutionalizes reusable building blocks and best practices across the enterprise.

Enterprise learning frameworks and AI literacy

The hub designs capability frameworks and learning paths tailored to executives, managers, analysts, and frontline employees. Tredence’s guide on AI CoEs describes the hub as the place where people, process, and technology are integrated into a scalable AI enablement engine, rather than a purely technical function.

Portfolio and value management

It maintains a portfolio view of AI initiatives, prioritizing use cases, tracking outcomes, and ensuring alignment with strategic objectives. Microsoft and Deloitte both emphasize the CoE’s role in continuously connecting AI projects with business value, rather than allowing isolated experiments to proliferate.

In short, the hub sets the “rules of the game,” provides shared services, and ensures AI remains safe, ethical, and strategically aligned.
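As an illustration of how hub-defined risk controls and approval workflows might be encoded, the sketch below routes a proposed use case to a set of approvers based on a risk tier. The tiering rules, attribute names, and approver roles are hypothetical assumptions for the example; they are not drawn from Microsoft's, Deloitte's, or any other cited framework.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class UseCase:
    name: str
    business_unit: str
    uses_personal_data: bool
    affects_customers: bool
    automated_decision: bool  # the system acts without human review


def risk_tier(use_case: UseCase) -> RiskTier:
    """Assign a tier using simple, assumed rules."""
    sensitive = use_case.uses_personal_data or use_case.affects_customers
    if use_case.automated_decision and sensitive:
        return RiskTier.HIGH
    if use_case.automated_decision or sensitive:
        return RiskTier.MEDIUM
    return RiskTier.LOW


# Hypothetical approver roles per tier.
APPROVERS = {
    RiskTier.LOW: ["business_unit_owner"],
    RiskTier.MEDIUM: ["business_unit_owner", "hub_security"],
    RiskTier.HIGH: ["business_unit_owner", "hub_security", "hub_legal_compliance"],
}


def required_approvers(use_case: UseCase) -> list:
    """Return the sign-offs needed before the use case can proceed."""
    return APPROVERS[risk_tier(use_case)]


summarizer = UseCase("meeting_summaries", "operations", False, False, False)
credit_bot = UseCase("credit_limit_decisions", "finance", True, True, True)

print(required_approvers(summarizer))
print(required_approvers(credit_bot))
```

A tiered design like this is one way to address the governance-versus-agility tension: low-risk ideas need only local sign-off, while the hub's scrutiny is concentrated on use cases that touch personal data, customers, or unsupervised decisions.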

2. The Spokes: Business-Embedded Innovation and Execution

Around this hub sit the business-unit spokes: domain teams in areas such as operations, finance, HR, customer service, or manufacturing that are closest to real business problems and data.

Their role is to:

Identify and refine high-value use cases

Spokes surface opportunities grounded in domain reality, from intelligent document processing to demand forecasting or agent-assist tools. Gartner’s work on analytics CoEs and self-service analytics describes this as placing “analytics franchises” inside each department, connected back to a central analytics CoE that provides shared standards and capabilities.

Co-create and localize solutions

Spokes work with the hub to adapt enterprise patterns to local needs: integrating models into existing workflows, CRMs, ERPs, and line-of-business tools. This echoes the “federated” or hub-and-spoke structures recommended in several CoE implementation guides, where departments retain agility while benefiting from central expertise.

Champion adoption and change management

Local “AI champions” or “departmental owners” educate peers, collect feedback, and drive behavioral change. Valorem’s CoE governance guide notes that hub-and-spoke models scale well because departmental champions accelerate adoption without sacrificing shared standards.

Feed learning back to the hub

Lessons from real deployments – failure modes, user behavior, edge cases – are passed back to the hub to refine policies, patterns, and training content. This creates a continuous learning loop rather than one-directional governance.

3. Why the Hub-and-Spoke Model Works

This structure ensures enterprise alignment while preserving business-unit agility:

The hub provides strategic direction, risk control, and reusable assets, preventing fragmented or ungoverned AI adoption – a risk repeatedly flagged in Microsoft’s Cloud Adoption Framework and multiple AI governance playbooks.

The spokes keep AI grounded in real business contexts, helping avoid the perception of a distant, bureaucratic central team that is out of touch with frontline needs – a problem documented in CoE and analytics operating-model case studies.

Recent practitioner literature on generative AI CoEs and “agentic AI” CoEs converges on the same point: organizations that combine a strong central AI hub with empowered, domain-focused spokes are better able to scale AI safely, rapidly, and with measurable impact.

Crossing the Threshold: How Phase 3 Converts AI Experiments into Enterprise Capability

When Phase 3 is executed effectively, organizations experience a decisive shift from experimentation to operational maturity. The establishment of an AI Center of Excellence brings structure, continuity, and discipline to AI adoption, enabling outcomes that include:

Institutionalized AI governance

Repeatable deployment and scaling processes

Workforce confidence in AI-enabled work

Measurable productivity gains and business impact

Reduced ethical, legal, and operational risk

A sustained pipeline of AI-driven innovation

At this stage, AI is no longer perceived as a disruptive force to be cautiously tested. It becomes a trusted enterprise capability — one that strengthens human judgment, enhances operational efficiency, and informs strategic decision-making across the organization.

Phase 3 is the true pivot point of the AI literacy roadmap. It is the moment when organizations move from isolated initiatives to enterprise-wide competence. By building an AI Center of Excellence, enterprises ensure that their AI efforts remain coordinated, compliant, scalable, and consistently value-driven.

In a world where AI adoption is accelerating faster than organizational readiness, the absence of strong governance is no longer a neutral gap — it is a strategic risk. The AI CoE is the structure that transforms that risk into sustained competitive advantage.

—RK Prasad (@RKPrasad)